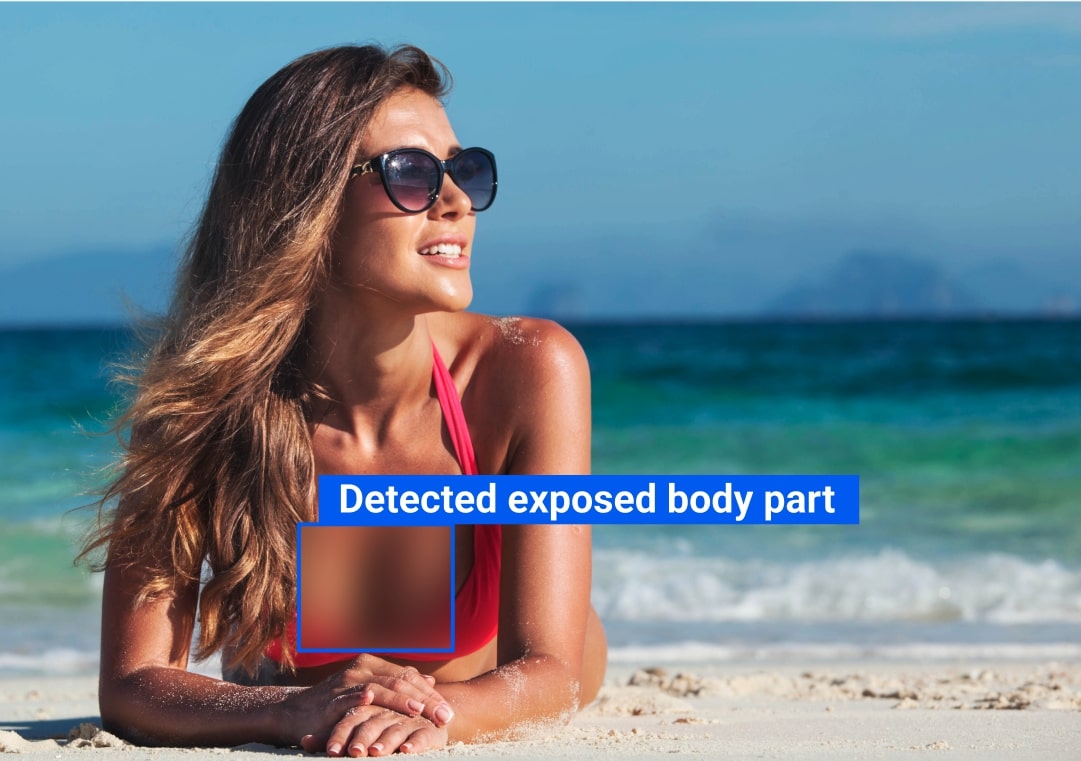

How Does the NSFW Detection API Work?

Find and classify inappropriate content that is not safe for work (NSFW). The default detection threshold for every class is 0.6.

Our NSFW API detects more than 15 classes, including:

- EXPOSED_ANUS

- EXPOSED_BUTTOCKS

- EXPOSED_BREAST_F

- EXPOSED_GENITALIA_F

- EXPOSED_GENITALIA_M
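The per-class threshold can be sketched as follows. This is a minimal sketch, assuming the API returns a confidence score between 0 and 1 for each detected class. The response shape, the hypothetical helper names `flagged_classes` and `is_nsfw`, the optional per-class `overrides`, and the choice that a score exactly equal to 0.6 counts as a match are all assumptions for illustration, not documented SmartClick behavior.

```python
from typing import Dict, List, Optional

# Default threshold stated above; applied to every class unless overridden.
DEFAULT_THRESHOLD = 0.6


def flagged_classes(
    scores: Dict[str, float],
    threshold: float = DEFAULT_THRESHOLD,
    overrides: Optional[Dict[str, float]] = None,
) -> List[str]:
    """Return the classes whose score meets or exceeds their threshold.

    `scores` is an assumed response shape: class name -> confidence in [0, 1].
    `overrides` (hypothetical) lets a caller set a stricter or looser
    threshold for individual classes.
    """
    overrides = overrides or {}
    hits = [
        name
        for name, score in scores.items()
        if score >= overrides.get(name, threshold)
    ]
    # Sort so the most confident detection comes first.
    return sorted(hits, key=lambda name: scores[name], reverse=True)


def is_nsfw(scores: Dict[str, float], threshold: float = DEFAULT_THRESHOLD) -> bool:
    """Treat an image as NSFW if at least one class is flagged."""
    return bool(flagged_classes(scores, threshold))


# Example with made-up scores (not real API output).
example = {
    "EXPOSED_BREAST_F": 0.82,
    "EXPOSED_BUTTOCKS": 0.41,
    "EXPOSED_GENITALIA_M": 0.63,
}
print(flagged_classes(example))  # ['EXPOSED_BREAST_F', 'EXPOSED_GENITALIA_M']
print(is_nsfw(example))  # True
```

Here `EXPOSED_BUTTOCKS` scores 0.41, below the 0.6 default, so it is not flagged; lowering its threshold through `overrides` would include it.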

NSFW Image Detection API Use Cases

Forum Moderation

Automatically identify and moderate explicit or offensive images and prevent users from spamming the channel with inappropriate content.

Messaging Apps

Prevent users from accessing NSFW content in chats and keep communication through messaging apps safe.

Comment Moderation

Instantly recognize potentially offensive content and keep the comment section safe from harmful mentions and abusive messages.

Digital Ads

Use inappropriate-image detection to find and block offensive or adult content in digital ads before the ads are delivered.

E-commerce & Marketplaces

Keep your marketplace free from NSFW content and safe for your users. Automatically flag any adult content and protect your brand reputation.

Dating Websites

Create a safe environment for users who are vulnerable to explicit, offensive, or inappropriate content sent by potential abusers. Keep your dating site's content clean and abuse-free.

Stock Websites

Moderate user-generated content to detect any potentially harmful content. SmartClick’s NSFW API makes it easy to prevent any inappropriate image from being shared.

Video Game Streaming Platforms

Use our nudity detection API to catch adult content in live video game streams and make sure only safe content is published.

SmartClick is a full-service software provider delivering artificial intelligence & machine learning solutions for businesses.