Head Pose Estimation

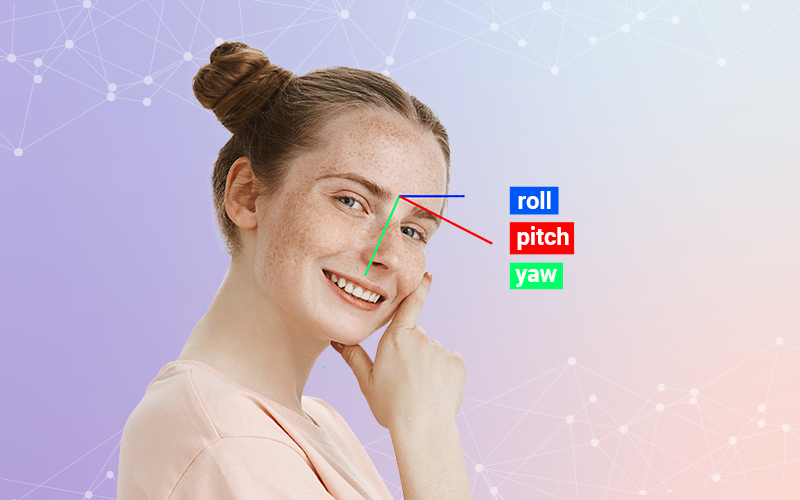

Head pose estimation detects the orientation of a human head in an image. The technology describes the head's movement with three angles (yaw, pitch, and roll) and uses them to predict the position of the head in real time.

First, the system detects 2D facial landmarks in the image, e.g., the eyes, lips, chin, and nose tip, and returns their coordinates. Next, it fits a 3D human face model to its 2D projections in the image and calibrates the camera to find the rotation and translation vectors that relate the real-world and camera coordinate systems.
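The relationship between the two coordinate systems can be written with the standard pinhole camera model. The following is a sketch only: it assumes an intrinsic matrix K with focal lengths f_x, f_y and principal point (c_x, c_y), and it ignores lens distortion. The symbols are illustrative and are not taken from the API itself.

```latex
s \begin{bmatrix} u \\ v \\ 1 \end{bmatrix}
=
\underbrace{\begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}}_{K}
\begin{bmatrix} R & \mathbf{t} \end{bmatrix}
\begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix}
```

Here (X, Y, Z) is a point on the 3D face model, (u, v) is its pixel position in the image, R is the 3x3 rotation matrix equivalent to the rotation vector, t is the translation vector, and s is a scale factor. Fitting the model means choosing R and t so that the projected model points land on the detected 2D landmarks.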

Main Function

The model recognizes all of the faces in an image and uses each one as an input to analyze the posture of the head. The model outputs the coordinates of each face as well as the 3D pose with yaw, pitch, and roll values.

Input

The input is an image.

Output

The output is a list of dictionaries, one per detected face, each containing the face coordinates and the detection probability. The face position is given as a rectangle defined by its top-left and bottom-right corners. Each dictionary also holds three direction values for the face: yaw, pitch, and roll.

How Does Head Pose Estimation Work?

The model works in two stages. It first detects every face in the image, then analyzes each detected face separately to estimate the posture of the head. For each face it returns the face coordinates together with the 3D pose as yaw, pitch, and roll values.

Technologies We Use To Build this API

The technology combines landmark detection with geometric estimation. A keypoint detector finds the 2D locations of several facial landmarks, including the eyes, eyebrows, nose tip, lips, and chin. From these landmarks a 3D pose estimate is computed, and the head is tracked from that point on.
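To make the last step concrete, the sketch below converts between a rotation matrix and yaw, pitch, and roll angles. It is an illustration under stated assumptions, not the API's implementation: it assumes angles in degrees and the convention R = Rz(roll) · Ry(yaw) · Rx(pitch), with pitch about the x-axis, yaw about the y-axis, and roll about the z-axis. The source does not say which convention or unit the API uses, and the function names are hypothetical.

```python
import math


def matmul(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotation_from_euler(yaw_deg, pitch_deg, roll_deg):
    """Build R = Rz(roll) * Ry(yaw) * Rx(pitch) (assumed convention)."""
    y, p, r = (math.radians(a) for a in (yaw_deg, pitch_deg, roll_deg))
    cy, sy = math.cos(y), math.sin(y)
    cp, sp = math.cos(p), math.sin(p)
    cr, sr = math.cos(r), math.sin(r)
    rx = [[1, 0, 0], [0, cp, -sp], [0, sp, cp]]   # pitch about x
    ry = [[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]]   # yaw about y
    rz = [[cr, -sr, 0], [sr, cr, 0], [0, 0, 1]]   # roll about z
    return matmul(rz, matmul(ry, rx))


def euler_from_rotation(m):
    """Recover (yaw, pitch, roll) in degrees; valid while |yaw| < 90."""
    yaw = math.atan2(-m[2][0], math.hypot(m[0][0], m[1][0]))
    pitch = math.atan2(m[2][1], m[2][2])
    roll = math.atan2(m[1][0], m[0][0])
    return tuple(math.degrees(a) for a in (yaw, pitch, roll))


if __name__ == "__main__":
    r = rotation_from_euler(20.0, -10.0, 5.0)
    print(euler_from_rotation(r))  # round-trips to the input angles
```

The recovery formulas break down when the yaw reaches 90 degrees in either direction (gimbal lock), which is why the docstring restricts the valid range.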

For example

    [
        {
            "probability": 0.9999996423721313,
            "rectangle": {
                "left": 89.36750197336077,
                "top": 57.552113214756055,
                "right": 293.84930589000385,
                "bottom": 350.8455882774045
            },
            "direction": {
                "yaw": 0.9754817470297894,
                "pitch": -1.840920759566032,
                "roll": 0.9923179751837665
            }
        }
    ]
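A response shaped like the example above can be consumed as follows. This is a minimal sketch: the helper name `summarize_faces` is hypothetical, the sample string simply repeats the example values, and the code assumes pixel coordinates with the origin at the top-left, so that width is `right - left` and height is `bottom - top`.

```python
import json

# Sample payload copied from the example response.
SAMPLE_RESPONSE = """
[
  {
    "probability": 0.9999996423721313,
    "rectangle": {
      "left": 89.36750197336077,
      "top": 57.552113214756055,
      "right": 293.84930589000385,
      "bottom": 350.8455882774045
    },
    "direction": {
      "yaw": 0.9754817470297894,
      "pitch": -1.840920759566032,
      "roll": 0.9923179751837665
    }
  }
]
"""


def summarize_faces(response_text):
    """Flatten each face entry into one dict with box size and pose angles."""
    summaries = []
    for face in json.loads(response_text):
        rect = face["rectangle"]
        direction = face["direction"]
        summaries.append({
            "probability": face["probability"],
            "width": rect["right"] - rect["left"],    # assumes right > left
            "height": rect["bottom"] - rect["top"],   # assumes bottom > top
            "yaw": direction["yaw"],
            "pitch": direction["pitch"],
            "roll": direction["roll"],
        })
    return summaries


if __name__ == "__main__":
    for face in summarize_faces(SAMPLE_RESPONSE):
        print(face)
```

Because the output is a list, the same loop handles images with several faces or with none.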

Why Work with SmartClick?

Easy to Integrate

Our head pose estimation API enables quick and smooth integrations across various platforms and applications. We have made our API technology easy and simple for you to connect to any system without additional resources.

Professional Team

We are a team of dedicated professionals who bring their deep domain knowledge and strong expertise to create solutions that cater to unique business requirements.

Customizable Product

Our APIs are easily customizable according to specific business models to suit the current needs and challenges. With the help of our in-house AI technology, we deliver the best solutions tailored to your business.

Pricing

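| Plan | Price | Monthly quota | Rate limit |
| --- | --- | --- | --- |
| basic | $0.00/mo | 1,000/month | 30 requests per minute |
| pro | $69.99/mo | 45,000/month + $0.003 per additional request | 60 requests per minute |
| ultra | $299.00/mo | 250,000/month + $0.0015 per additional request | 80 requests per minute |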
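To compare plans for an expected request volume, the pricing can be turned into a small calculation. The sketch below rests on two assumptions that the pricing list does not state outright: the per-request fee applies only to requests beyond the monthly quota, and the basic plan cannot exceed its quota because no overage fee is listed for it. The names `PLANS` and `monthly_cost` are hypothetical.

```python
# Plan figures copied from the pricing list; "overage" is the assumed
# fee per request beyond the monthly quota (None = no overage listed).
PLANS = {
    "basic": {"monthly_fee": 0.00, "included": 1_000, "overage": None},
    "pro": {"monthly_fee": 69.99, "included": 45_000, "overage": 0.003},
    "ultra": {"monthly_fee": 299.00, "included": 250_000, "overage": 0.0015},
}


def monthly_cost(plan_name, requests):
    """Return the estimated monthly cost in USD for a request volume."""
    plan = PLANS[plan_name]
    extra = max(0, requests - plan["included"])
    if extra and plan["overage"] is None:
        raise ValueError(f"{plan_name} plan has no overage pricing listed")
    return plan["monthly_fee"] + extra * (plan["overage"] or 0.0)


if __name__ == "__main__":
    for name in ("pro", "ultra"):
        print(name, round(monthly_cost(name, 100_000), 2))
```

Under these assumptions, 100,000 requests in a month come to about $234.99 on pro (69.99 + 55,000 × 0.003) and $299.00 on ultra, so pro is the cheaper choice at that volume.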

SmartClick is a full-service software provider delivering artificial intelligence & machine learning solutions for businesses.